Why should I care about this?

Memory-safety is extremely important in the context of software security, with the majority of security vulnerabilities being caused by memory-related mistakes. This trend seems to be consistent over time: efforts to fix memory-safety issues have not reduced the prevalence of memory-related exploits. So far, the only effective way to address this seems to be the use of memory-safe languages. In fact, there are many recent examples of security-critical projects moving away from unsafe languages like C/C++. The examples that stand out the most to me are the adoption of Rust in the Linux kernel and in Microsoft Windows.

Despite this, memory-safety is a topic that doesn’t always get the attention it deserves, especially among those of us who write our code in languages that are considered memory-safe (like Java or other JVM languages). But even then, memory-safety still affects us directly, in the form of CVEs in our dependencies, and indirectly, via the trade-offs that our programming languages make to achieve it.

In this article I want to provide some context to the “safe” Java world. We’ll examine how Java achieves memory-safety and what the costs of this safety are, especially when compared with C/C++, which provide basically no safety guarantees.

What exactly is memory-safety?

To start off, you might legitimately ask what memory-safety even is. And especially if Java and other memory-safe languages are all that you have been exposed to so far, you might be wondering what an unsafe language even looks like.

Memory-safety, as it relates to programming languages, is the property of a language that guarantees that certain classes of memory access errors cannot happen. Consider the following example in Java:

```java
var array = new int[] {1, 2, 3};
System.out.println(array[3]); // crashes with an exception!
```

Running this code will cause the following exception:

```
Exception in thread "main" java.lang.ArrayIndexOutOfBoundsException: Index 3 out of bounds for length 3
```

Compare this with the equivalent version in C++:

```cpp
#include <iostream>

int main() {
    int array[] = { 1, 2, 3 };
    std::cout << array[3] << '\n'; // compiles and runs, but reading array[3] is undefined behaviour
}
```

On my machine, this gives the following output (the value often differs from run to run):

```
32642
```

Even though we’re accessing a value that is not part of the array, we are able to read something (in my case “32642”, but this depends on the system/compiler/program). In our example, this is some value from the stack, because our array is allocated there.

This is not restricted to reading, either: a piece of badly written code can just as easily overwrite almost any value on the stack or heap, including the contents of other variables and control data like return addresses. Apart from the security implications, this can also lead to very subtle bugs that are extremely hard to track down.
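Java, on the other hand, checks writes just as it checks reads. The minimal sketch below (the class and method names are made up for illustration) tries to write one element past the end of an array; instead of silently overwriting whatever lies next to the array, the JVM rejects the write with an exception:

```java
public class OutOfBoundsWrite {

    // Tries to store a value at the given index and reports whether the JVM allowed it.
    static boolean tryWrite(int[] array, int index, int value) {
        try {
            array[index] = value; // checked by the JVM, just like a read
            return true;
        } catch (ArrayIndexOutOfBoundsException e) {
            return false; // the write was rejected before any memory was touched
        }
    }

    public static void main(String[] args) {
        var array = new int[] {1, 2, 3};
        System.out.println(tryWrite(array, 2, 42)); // true: index 2 is valid
        System.out.println(tryWrite(array, 3, 42)); // false: index 3 is out of bounds
        System.out.println(java.util.Arrays.toString(array)); // [1, 2, 42]
    }
}
```

Catching the exception is only done here to make the behaviour visible; in real code, an `ArrayIndexOutOfBoundsException` signals a bug that should be fixed rather than handled.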

What other safety features does Java have?

As another, less obvious example, consider the following Java code:

```java
import java.util.ArrayList;
import java.util.Arrays;

var vector = new ArrayList<Integer>(Arrays.asList(1, 2, 3));
var first = vector.get(0);
vector.add(4);
System.out.println("First value: " + first);
```

Looking at this code, most people wouldn’t think twice about it. It may be somewhat unusual, but it works without problems:

```
First value: 1
```

In contrast, the equivalent C++ version contains a severe bug, because it (potentially) reads invalid memory:

```cpp
#include <iostream>
#include <vector>

int main() {
    std::vector<int> vector = {1, 2, 3};
    int* first = &vector[0];
    vector.push_back(4);
    std::cout << "First value: " << *first << '\n'; // Very bad! Potential read of invalid memory!
}
```

On my system, this doesn’t crash (though it could), but it produces an invalid result:

```
First value: 1698178957
```

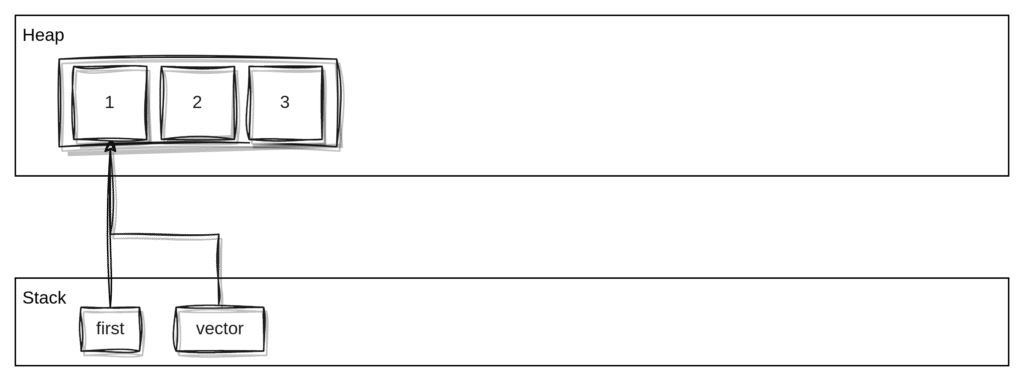

What is going on here? The issue is that std::vector, just like Java’s ArrayList, is implemented using an array. That array is allocated somewhere on the heap and has a specific size, like in the picture below. The variable first points to the location in memory where the first element in the array is located:

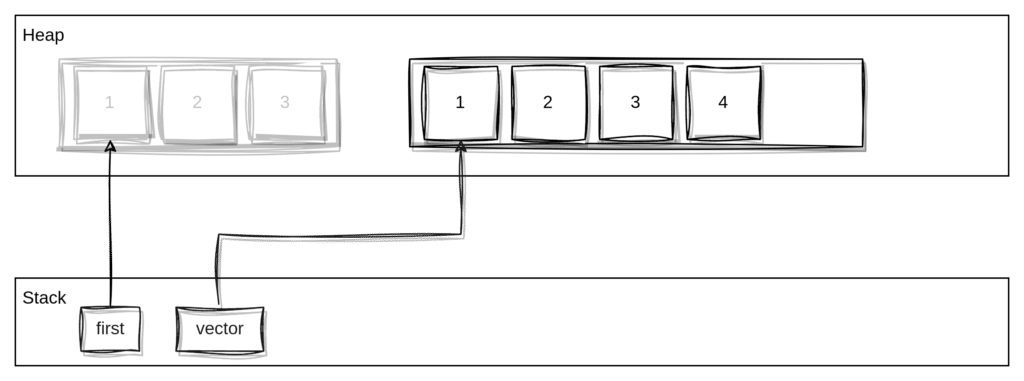

Arrays always have a fixed size and as we add more elements to the vector, the internal array will eventuelly need to be resized (i.e. recreated at a new location and the old memory freed), leaving the first variable pointing into invalid memory. Accessing first is undefined behaviour, which means that we might get the correct value, we might get a different value, or we might crash the program:

In the Java world, none of this affects us, since the way in which memory and references are handled is fundamentally different. Memory will never be invalidated as long as there is a reference to it, and we can always assume that our references are valid (as long as they are not null at least, but that’s a whole different story) and memory will automatically be collected when it is no longer being referenced anywhere.

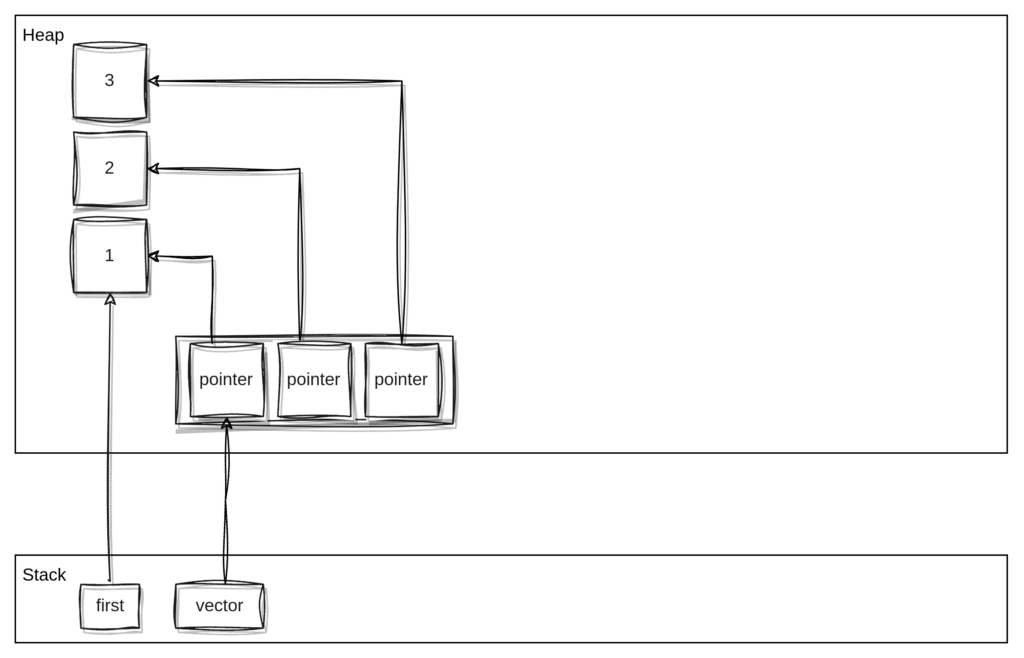

Additionally, the values inside a Java list are actually not stored inside the list itself, but somewhere else inside the heap. The list will only contain references to those values (that’s why you cannot create a List<int> in Java, it has to be List<Integer>):

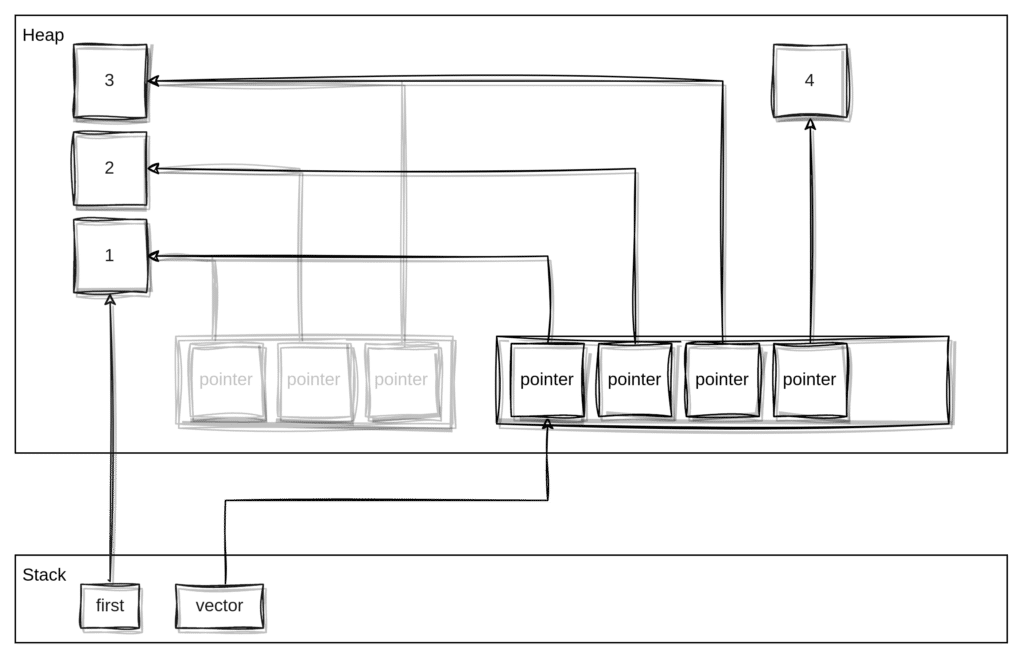

Same as with the C++ `vector`, adding another element to the `ArrayList` will potentially resize it (if there isn’t enough space in the array for another element), which will re-allocate it at a new location. But this time, the `first` variable still references valid memory, because the contents of the list are not actually stored inside it:

The greyed-out old array is actually not invalid memory in this case, but just not referenced anymore, so it will be collected during the next run of the garbage collector.
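To check that `first` really is just another reference to the same `Integer` object the list points to, we can compare references with `==` before and after forcing the list to grow. In this small sketch, the class and method names are made up, the values 1000 to 3000 are chosen so they fall outside the small range of `Integer` values the JVM caches (which keeps the identity check honest), and the number of added elements is arbitrary, just large enough to trigger several resizes:

```java
import java.util.ArrayList;
import java.util.List;

public class StableReferences {

    // Returns true if the element at index 0 is still the very same object after the list has grown.
    static boolean firstSurvivesGrowth(int extraElements) {
        var vector = new ArrayList<Integer>(List.of(1000, 2000, 3000));
        Integer first = vector.get(0); // a reference to the Integer object, not to a slot in the array

        for (int i = 0; i < extraElements; i++) {
            vector.add(i); // with enough elements, the internal array is re-allocated several times
        }

        // Between two Integers, `==` compares references: same object, still valid, still 1000.
        return first == vector.get(0) && first == 1000;
    }

    public static void main(String[] args) {
        System.out.println(firstSurvivesGrowth(10_000)); // true
    }
}
```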

What are the costs of this memory-safety?

Good things are rarely free, so it stands to reason that we’re paying a price for the safety we gain in Java. And as you’ve probably guessed, we usually pay this price in performance and memory consumption. Some of these costs should already be obvious from the previous example.

Runtime checks

Java uses run-time checks to ensure that badly written code does not access memory it is not supposed to, for example by reading or writing beyond the last element of an array. Basically, every time an element of an array is accessed, the index is checked to make sure that it is not out of bounds (though the JVM may be able to eliminate some unnecessary checks).
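Conceptually, every array access in Java comes with a check like the one written out by hand below. This is only an illustration: the JVM does not literally call `Objects.checkIndex` for `array[i]` (the check is part of the access itself), but that standard library method performs the same kind of test and throws an `IndexOutOfBoundsException` for an invalid index. The class and method names are made up for this sketch:

```java
import java.util.Objects;

public class BoundsCheckSketch {

    // What `array[index]` conceptually does: check the index first, then read.
    static int checkedRead(int[] array, int index) {
        Objects.checkIndex(index, array.length); // throws if index < 0 || index >= array.length
        return array[index];                     // the JVM still performs its own implicit check here
    }

    public static void main(String[] args) {
        var array = new int[] {1, 2, 3};
        System.out.println(checkedRead(array, 2)); // 3

        try {
            checkedRead(array, 3);
        } catch (IndexOutOfBoundsException e) {
            System.out.println("Rejected: " + e.getMessage());
        }
    }
}
```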

In C and C++, no such check is performed at all, and the data is accessed directly. The performance gained by omitting bounds checks is negligible for most real-world applications, but it can be significant in data-heavy scenarios where data is accessed in tight loops (this and the cache inefficiencies discussed below are basically why nobody writes machine learning algorithms in Java).
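Whether these checks actually cost anything in a tight loop depends on whether the JIT compiler can prove them redundant. The sketch below (hypothetical names) contrasts two loops. In the first, the index runs from 0 to `data.length`, a pattern that HotSpot’s optimizing compiler can typically recognize, so it may remove or hoist the per-element check. In the second, the index is loaded from another array, so the JIT generally cannot know that it is in range and has to keep a check for every access to `data`. Whether a given check is really eliminated depends on the JVM version and the surrounding code:

```java
public class LoopChecks {

    // The index is derived from the loop bounds: a candidate for bounds-check elimination.
    static long sumSequential(int[] data) {
        long sum = 0;
        for (int i = 0; i < data.length; i++) {
            sum += data[i];
        }
        return sum;
    }

    // The index comes from another array: the access to data[...] generally stays checked.
    static long sumIndirect(int[] data, int[] indices) {
        long sum = 0;
        for (int i = 0; i < indices.length; i++) {
            sum += data[indices[i]]; // indices[i] could be any int, so the JIT cannot assume it is in range
        }
        return sum;
    }

    public static void main(String[] args) {
        int[] data = {10, 20, 30, 40};
        int[] indices = {3, 2, 1, 0};
        System.out.println(sumSequential(data));        // 100
        System.out.println(sumIndirect(data, indices)); // 100
    }
}
```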

Memory layout and cache efficiency

As we’ve seen before, Java stores arrays and lists separately from their contents in memory (at least for non-primitive values, but more about that later). This uses more memory than the “flat” layout a language like C++ might use, and it also spreads the data out over a larger area of memory, which makes accessing it less cache efficient.

The same applies to other data structures as well. Consider the following Java code:

public class Person {

int age;

String name;

String address;

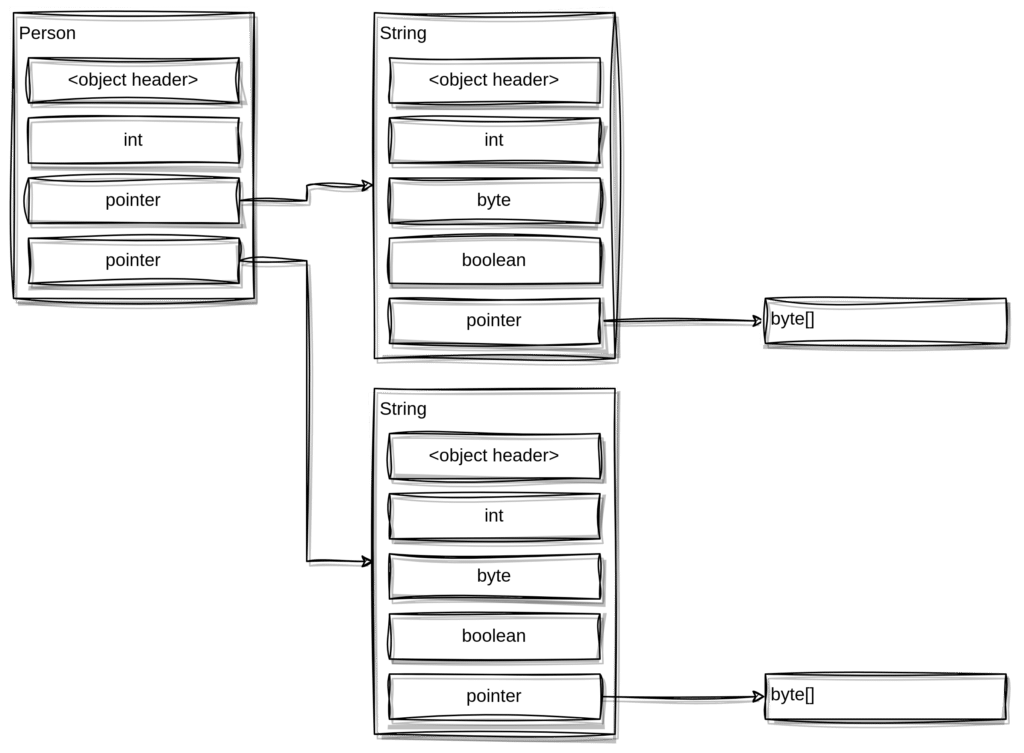

}An instance of this class will have the following layout on the heap:

The class, even though it is very simple, is spread out over memory in several objects, which are surprisingly large (`String` especially contains a lot of additional fields).

Also note the difference in how primitive fields (like `int`) are treated compared to references to other objects: every object, including arrays like `byte[]`, starts with an object header. The header contains class information that can be used for run-time instance checks and for reflection, as well as JVM internals like a “mark” word that is used, among other things, by the garbage collector. Referenced objects can also never be embedded into the object that references them (as that would interfere with garbage collection), while primitive values with a known size (like `int`, `byte`, …) are embedded directly.
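One way to observe that referenced objects are not embedded is to let two `Person` instances point to the same `String`. The sketch below uses a slightly adapted version of the `Person` class from above (a constructor was added, and the field values are made up). The `int` field holds its value directly, while the `String` fields only hold references, so both objects end up sharing a single `String` on the heap instead of each carrying its own copy:

```java
public class SharedReferences {

    static class Person {
        int age;        // the value itself is stored inside the Person object
        String name;    // only a reference to a separate String object
        String address; // same here

        Person(int age, String name, String address) {
            this.age = age;
            this.name = name;
            this.address = address;
        }
    }

    public static void main(String[] args) {
        String address = "1 Example Street"; // hypothetical value
        var alice = new Person(30, "Alice", address);
        var bob = new Person(40, "Bob", address);

        // Both objects reference the same String instance; nothing was copied into them.
        System.out.println(alice.address == bob.address); // true

        // The primitive field lives inside each object, so changing one does not affect the other.
        alice.age = 31;
        System.out.println(bob.age); // 40
    }
}
```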

Now, I don’t want to go into too much detail here, especially because the layout of objects in memory is unspecified and might change between versions or different implementations of the JVM. I also left out some details, like field padding, for clarity’s sake. But if you are interested in learning more about the memory layout of JVM objects, I can recommend the article “Memory Layout of Objects in Java“, as well as the JOL library.

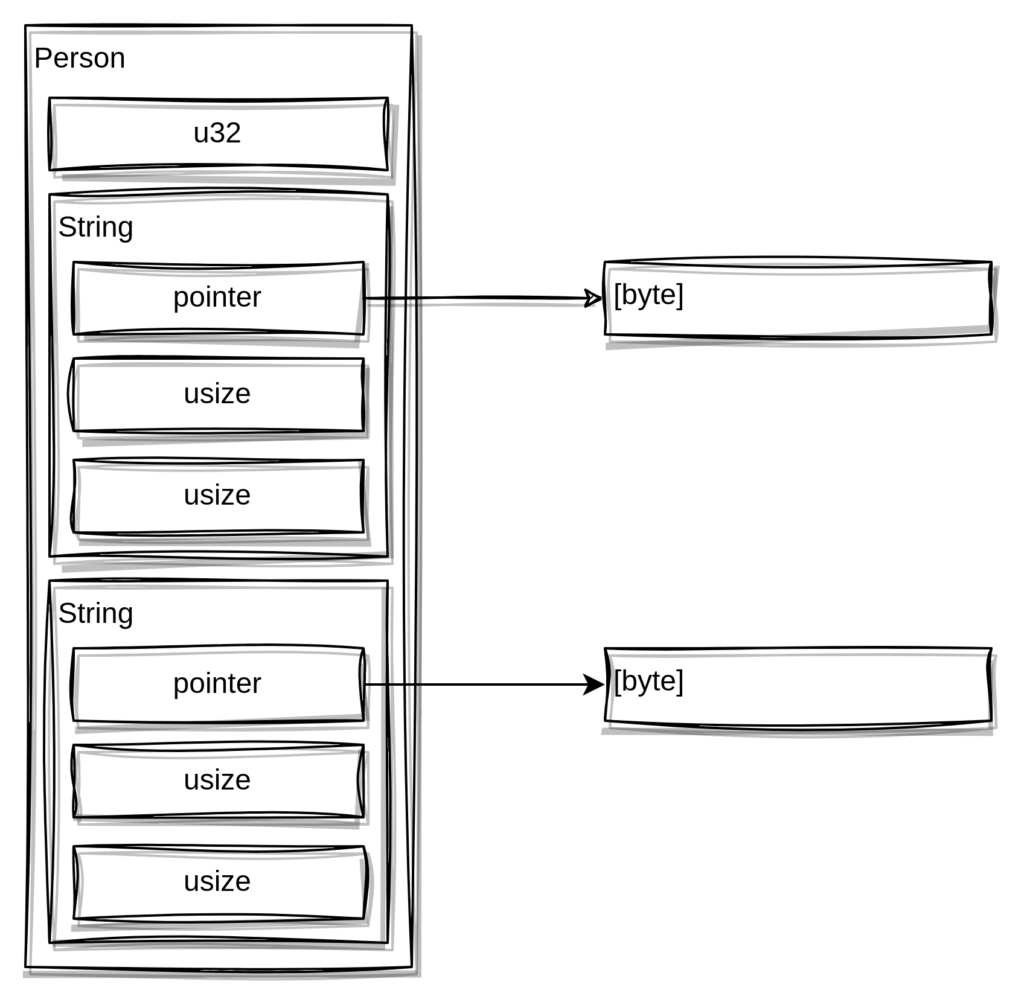

For comparison, let’s have a look at how the equivalent structure would be layed out in a language like C++ (this is the layout from Rust, but C++ would be very similar and the exact details don’t really matter for this blog post):

You can see that the overall structure is less spread out. There is also no attached metadata, because there is no runtime (like the JVM) that needs it. Anything that has a size known at compile time (like our `String` objects) is embedded into the object it belongs to. Only variable-size data, like our byte arrays, is located elsewhere in memory.

This all means that, depending on the situation and what optimizations the JVM is able to apply, Java will likely require more memory and be less cache efficient than a language like C++.

But things are not all bad: Java already has some support for cache-efficient data structures, and there are ongoing efforts to extend this support. Arrays of primitive types (like `int[]` or `float[]`, but not `Integer[]` or `Float[]`) are already laid out flat, the same way C++ arrays are. For the future, there is Project Valhalla, which, among other things, aims to provide more complex primitive types that can be laid out flat in memory, much like in C++. For those interested, Baeldung has an easy-to-read overview of Valhalla.
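The difference between flat and boxed arrays shows up in code, even if the memory layout itself does not. In the sketch below (class and method names are made up), the `int[]` holds the numbers directly, one after another, while the `Integer[]` holds references to `Integer` objects stored elsewhere on the heap, each with its own object header. Summing the boxed array therefore means following one reference per element. Both methods produce the same result, but the boxed version has to touch more memory to get there:

```java
public class FlatVsBoxed {

    // The values are stored inline in the array: one contiguous block of ints.
    static long sumFlat(int[] values) {
        long sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // The array only stores references; each element is an Integer object somewhere on the heap.
    static long sumBoxed(Integer[] values) {
        long sum = 0;
        for (Integer v : values) {
            sum += v; // follows the reference, then unboxes the value
        }
        return sum;
    }

    public static void main(String[] args) {
        int n = 1_000;
        int[] flat = new int[n];
        Integer[] boxed = new Integer[n];
        for (int i = 0; i < n; i++) {
            flat[i] = i;
            boxed[i] = i; // autoboxing via Integer.valueOf(i)
        }
        System.out.println(sumFlat(flat));   // 499500
        System.out.println(sumBoxed(boxed)); // 499500
    }
}
```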

JVM overhead

Another significant source of overhead is the runtime itself. For example, there is additional memory that the JVM allocates for internal use, i.e. memory that isn’t used by your application directly. This memory includes, among others, the code cache used during JIT compilation, memory required by the garbage collector, and native buffers. But apart from the memory overhead, there is also runtime overhead, which is mostly caused by the garbage collector and JIT compiler.
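Part of this overhead can be observed from inside a running program through the standard `java.lang.management` API. The sketch below (hypothetical class name) prints the JVM’s heap usage next to its non-heap usage, which on HotSpot includes areas such as class metadata and the JIT code cache, and then lists the individual memory pools. Pool names differ between JVM versions and garbage collectors, so treat whatever it prints as an example. Native buffers and other native allocations are not necessarily covered by these numbers; for a fuller picture, HotSpot offers Native Memory Tracking (`-XX:NativeMemoryTracking=summary`):

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

public class JvmMemoryOverview {

    // Converts the "used" part of a MemoryUsage snapshot to whole mebibytes.
    static long usedMiB(MemoryUsage usage) {
        return usage.getUsed() / (1024 * 1024);
    }

    public static void main(String[] args) {
        var memory = ManagementFactory.getMemoryMXBean();

        // Heap: where the application's objects live.
        System.out.println("Heap used:     " + usedMiB(memory.getHeapMemoryUsage()) + " MiB");
        // Non-heap: memory the JVM uses for itself (e.g. class metadata, JIT code cache).
        System.out.println("Non-heap used: " + usedMiB(memory.getNonHeapMemoryUsage()) + " MiB");

        // Individual pools; their names depend on the JVM version and the selected GC.
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (usage != null) {
                System.out.println(pool.getType() + " / " + pool.getName() + ": " + usedMiB(usage) + " MiB");
            }
        }
    }
}
```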

The JVM’s garbage collectors (there are several implementations for different situations) are highly optimized and generally have a comparatively low runtime cost. But they do cost something, and they will stop your application for short periods during garbage collection, which might be unacceptable in some situations. There are GC implementations specifically designed to mitigate the problem of GC pauses (such as ZGC and Shenandoah), but I’m not aware of any that can avoid them completely (except the no-op Epsilon collector, which never reclaims any memory, of course).
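The work the garbage collector does can be made visible in a similar way. The sketch below (hypothetical names, arbitrary amounts) allocates a lot of short-lived garbage and then asks each collector’s `GarbageCollectorMXBean` how often it ran and how much time it spent in total. Writing each array to a `volatile` field makes it escape, so the JIT cannot optimize the allocations away. Which collectors are listed depends on the JVM and its flags, and the reported collection time is not necessarily the same as the time your application was actually paused:

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

public class GcStatistics {

    // Writing to this field makes every array escape, so each one is really allocated on the heap.
    private static volatile byte[] sink;

    // Allocates `iterations` arrays of 1 KiB; each one becomes garbage as soon as the next is created.
    static long churn(int iterations) {
        long allocatedBytes = 0;
        for (int i = 0; i < iterations; i++) {
            sink = new byte[1024];
            allocatedBytes += 1024;
        }
        return allocatedBytes;
    }

    public static void main(String[] args) {
        long allocated = churn(1_000_000); // roughly 1 GB of short-lived garbage
        System.out.println("Allocated " + allocated / (1024 * 1024) + " MiB of short-lived arrays");

        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Both values are accumulated since JVM start; -1 means the value is not available.
            System.out.println(gc.getName() + ": " + gc.getCollectionCount() + " collections, "
                    + gc.getCollectionTime() + " ms");
        }
    }
}
```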

JIT compilation also comes with significant overhead, because (re-)compiling code during runtime is obviously not free. The full story is nuanced, though, because JIT compilers are able to perform optimizations that traditional static compilers simply cannot. They know the exact hardware a program is running on and can collect runtime statistics to detect code that can benefit the most from optimizations. This can compensate for some of the other runtime overhead we talked about before and is the reason why Java’s performance actually isn’t that bad when compared to other languages. This all depends heavily on the situation, but especially long-running applications are often able to amortize the startup cost of the JVM and benefit from the optimizations the JIT-compiler provides.
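JIT compilation can be watched directly with HotSpot’s `-XX:+PrintCompilation` flag, which logs methods as they get compiled. In the small program below (names and iteration counts are arbitrary), `collatzSteps` is called often enough to become “hot”. Running it with `java -XX:+PrintCompilation JitWarmup.java` should show it being compiled, typically first by the quick C1 tier and later by the optimizing C2 tier, mixed in with many other methods from the JDK itself. The exact output format and compilation thresholds vary between JVM versions:

```java
public class JitWarmup {

    // Number of Collatz steps until n reaches 1 (n must be positive).
    // A small, CPU-bound method that becomes hot quickly.
    static int collatzSteps(long n) {
        int steps = 0;
        while (n != 1) {
            n = (n % 2 == 0) ? n / 2 : 3 * n + 1;
            steps++;
        }
        return steps;
    }

    public static void main(String[] args) {
        int longest = 0;
        for (long start = 1; start < 1_000_000; start++) {
            longest = Math.max(longest, collatzSteps(start)); // called about a million times
        }
        System.out.println("Longest chain below one million: " + longest + " steps");
    }
}
```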

Where can we go from here?

Even though we’ve mostly looked at the costs of Java’s memory-safety so far, I don’t want to paint a negative picture of Java. It should be remembered that engineering anything, including programming languages, comes with trade-offs and that memory-safety is generally well worth a few downsides (which aren’t even that big in many situations). Nevertheless, it is valid to ask where Java’s approach can be improved and to have a look at how memory-safety is achieved elsewhere.

So I’d like to end this post with a list of interesting projects that take different approaches from the one Java uses. The list mostly contains efforts to reduce the memory overhead of the JVM, because its runtime performance is generally adequate and less problematic than its memory consumption:

- Project Valhalla: As we’ve already discussed, this OpenJDK project aims to bring features to the JVM and Java to make “flat” memory layouts, like in C/C++, possible.

- GraalVM is a JDK based on OpenJDK and developed by Oracle. Among other things, it contains the following interesting approaches:

  - The JIT compiler included in GraalVM is able to perform escape analysis to determine whether an object is ever accessed from outside of a specific context (most commonly a method). If not, then the data does not need to be heap allocated and can instead be placed on the stack (or broken up into individual local variables). This has the following advantages:

    - It speeds up allocation, because heap allocation is a comparatively expensive operation, while stack allocation is basically free.

    - It is cache-efficient, because all the data a method needs can potentially be allocated together on the stack, instead of being spread over the heap.

    - It bypasses the garbage collector, so that it has less work to do and can run less often, because data on the stack is automatically cleaned up when a method returns.

    - It gives the compiler more room for optimization. If an object is only used in the local function and never touched by any other part of the runtime (like the GC), then the compiler can potentially change the memory layout of that object to make it more efficient.

  - GraalVM native image: Supports compiling JVM-based applications to native code. In the process, parts of the JVM’s runtime, like the JIT compiler, can be stripped, since they are no longer needed. This usually results in significantly reduced memory usage, because those parts of the runtime no longer need to be loaded into memory.

  - Compile-time optimizations applied by GraalVM native image: The compiler performs a reachability analysis of your code and removes everything that is not used by your application. This removes unused classes and functions, but (maybe surprisingly) also removes instance variables that are not used. Instances of classes that have been stripped like this therefore need less space in memory. Depending on the application, this reduction can be significant.


- Rust goes one step further and doesn’t use a managed runtime at all, so it does not suffer any of the associated overhead. It still provides automatic memory management, even without a garbage collector, so that users do not need to allocate or free memory manually. It achieves this with an innovative “ownership” system that lets the compiler keep track of the lifetimes of objects and decide at compile time when an object should be freed. The memory management code is then compiled into the program at the right locations and runs automatically with minimal overhead.
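To make the escape-analysis item in the list above a bit more concrete, here is a sketch of the kind of code it targets (all names are hypothetical). The `Point` created in `distanceFromOrigin` never leaves the method: it is not stored in a field, not returned, and not passed anywhere else. A JIT compiler with escape analysis (GraalVM’s compiler, but also HotSpot’s C2, where it is enabled by default via `-XX:+DoEscapeAnalysis`) may therefore skip the heap allocation entirely and keep `x` and `y` in registers or on the stack. `escapingPoint` is the counterexample: its object is returned, so it escapes. Whether the optimization actually happens is up to the compiler; the source code looks the same either way:

```java
public class EscapeAnalysisSketch {

    record Point(double x, double y) {
        double length() {
            return Math.sqrt(x * x + y * y);
        }
    }

    // The Point never escapes this method, so the JIT may replace it with plain local values.
    static double distanceFromOrigin(double x, double y) {
        var p = new Point(x, y);
        return p.length();
    }

    // The Point is returned, so it escapes this method; it can only avoid the heap if the JIT
    // inlines this method into a caller where the object does not escape any further.
    static Point escapingPoint(double x, double y) {
        return new Point(x, y);
    }

    public static void main(String[] args) {
        double total = 0;
        for (int i = 0; i < 1_000_000; i++) {
            total += distanceFromOrigin(3, 4); // 5.0 on every call
        }
        System.out.println(total);
        System.out.println(escapingPoint(3, 4));
    }
}
```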